Diversity and Inclusion Through the Lens of Artificial Intelligence

WISE Young Professionals’ Board member Frida Nzaba discusses the importance of diversity and inclusion in robotics and AI in ensuring a more inclusive future for all.

What is Diversity and Inclusion?

Diversity is defined as ‘any traits and characteristics that make people unique’, for example gender, race and ethnicity, geographic origin, religious beliefs or sexual orientation. It is also widely believed that each person brings a distinct set of experiences and perspectives, and that this is what sparks diverse thinking in the workplace. Inclusion builds on this and can be defined as treating people equally and with respect, ensuring they feel valued. A 2018 Deloitte report stated that ‘inclusion is experienced when people believe their unique and authentic self is valued by others, while at the same time having a sense of belonging to a group’ (Bourke & Dillon, 2018).

Importance of Diversity and Inclusion

Diversity and Inclusion (D&I) are powerful together and work in tandem. Alan Joyce, CEO of Qantas, has emphasised the importance of a diverse environment and an inclusive culture, both of which helped the airline through tough times: ‘diversity generated better strategy, better risk management and better outcome’ (Bourke & Dillon, 2018). Organisations with inclusive cultures are twice as likely to meet or exceed financial targets, six times more likely to be innovative and, overall, eight times more likely to achieve better business outcomes (Bourke, 2016).

In the past few years, the importance of D&I in the workplace has grown significantly, with many companies embedding it in their strategies and targets. Various studies highlight that diversity and inclusion in organisations have a positive effect on the bottom line, raise employee engagement, reduce employee turnover, increase creativity, speed up problem-solving and improve company reputation (Zojceska, 2018).

Artificial Intelligence in recruitment

What role does Artificial Intelligence (AI) play in diversity and inclusion? AI has the potential to minimise unconscious bias in hiring, promotions, performance appraisals, employee retention and talent management, and so to facilitate more diverse and inclusive workplaces.

Stop, pause, and reflect – Author’s reflection

2020 has been full of surprises in a short period of time. I reflect on how the pandemic left thousands of people facing redundancy, and how this intersected with the Black Lives Matter movement. The combination of the two left me and other black colleagues wondering whether we would be made redundant and, if so, how challenging it would be to go through a recruitment process for another job. Would we have to shorten our names or use our Christian names to give us a better chance of passing a CV screening without bias from recruiters? Would we have to tweak our CVs to contain fewer personal details that could be used to discriminate against us?

Machines are not naturally biased – they learn from humans

Artificial Intelligence is defined as ‘the development of computers’ ability to engage in human-like thought processes such as learning, reasoning and self-correction’ (Nico Kok, 2009). IBM reports that 65% of human resources professionals believe AI will be able to support them in talent acquisition and help mitigate bias (Zhang, et al., 2019).

Big data is another growing field that supports AI. The ability to capture large amounts of data and interpret it for organisations (predictive analytics) is proving useful when it comes to diversity and inclusion (Baker, 2013). Predictive analytics works by applying statistical formulas and models to historical data in order to predict future outcomes. The best predictive models typically combine several datasets, each selected by humans. However, because predictive analytics makes assumptions and runs tests based on past data, even slightly biased data is likely to be reflected in the outcome.
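To make the last point concrete, here is a minimal Python sketch, not any vendor's method. It assumes a hypothetical set of past hiring records (`HISTORY`, with invented numbers) in which equally skilled candidates from group "B" were hired less often than those from group "A", and a deliberately naive "model" (the hypothetical `train` and `predict` functions) that simply predicts the historical hire rate for each combination of skill level and group. Because it learns only from past decisions, it reproduces the skew in them.

```python
from collections import defaultdict

# Hypothetical historical records: (skill_level, group, was_hired).
# The numbers are invented purely for illustration.
HISTORY = (
    [("high", "A", True)] * 8 + [("high", "A", False)] * 2
    + [("high", "B", True)] * 4 + [("high", "B", False)] * 6
    + [("low", "A", True)] * 2 + [("low", "A", False)] * 8
    + [("low", "B", True)] * 1 + [("low", "B", False)] * 9
)

def train(records):
    """Learn the historical hire rate for every (skill, group) pair."""
    hired = defaultdict(int)
    total = defaultdict(int)
    for skill, group, was_hired in records:
        total[(skill, group)] += 1
        hired[(skill, group)] += int(was_hired)
    return {key: hired[key] / total[key] for key in total}

def predict(model, skill, group):
    """Predicted probability of being hired: simply the past hire rate."""
    return model[(skill, group)]

model = train(HISTORY)
for group in ("A", "B"):
    print(group, predict(model, "high", group))
```

Running it prints a predicted hire probability of 0.8 for a highly skilled candidate from group A and 0.4 for an equally skilled candidate from group B: the model has faithfully learned the bias in the past decisions. Real predictive models are far more complex, but the same principle applies to them.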

Various companies are exploring AI for their HR departments because machines do not have inherent biases as humans do, and they can screen potential candidates much faster and more efficiently. AI works from historical or input data and from algorithms established by its developers. Therefore, if the data and algorithms are deployed correctly, AI can learn to detect biases and report them back to an organisation, helping it make fair decisions.

According to Zhang, et al. (2019), AI can provide equal access to job opportunities. It allows candidates to learn about their skills and interests and matches them with job opportunities they might not have considered, which is a great way to bring fresh and diverse talent into an organisation. AI can also support inclusive job descriptions by detecting biased language and rewording it to suit a wider range of candidates. For example, women are known to be less likely than men to apply for roles when they feel they do not meet most of the criteria. AI can therefore help highlight the skills that are critical to the role and leave out irrelevant information on the job advert that may be gender-biased.
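Commercial AI tools for this are far more sophisticated, but the basic idea of flagging potentially biased wording in a job advert can be sketched in a few lines of Python. This is a simple word-list check rather than machine learning, and the word lists (`MASCULINE_CODED`, `FEMININE_CODED`) and the function `flag_gendered_words` are hypothetical examples chosen for illustration, not a validated vocabulary.

```python
import re

# Hypothetical, illustrative word lists. A real tool would rely on
# validated lists and on far more context than single words.
MASCULINE_CODED = {"aggressive", "competitive", "dominant", "ninja", "rockstar"}
FEMININE_CODED = {"collaborative", "supportive", "nurturing", "interpersonal"}

def flag_gendered_words(advert: str) -> dict[str, list[str]]:
    """Return the gender-coded words found in a job advert, in order of appearance."""
    words = re.findall(r"[a-z]+", advert.lower())
    return {
        "masculine": [w for w in words if w in MASCULINE_CODED],
        "feminine": [w for w in words if w in FEMININE_CODED],
    }

advert = ("We want a competitive, aggressive rockstar developer "
          "who thrives in a collaborative team.")
print(flag_gendered_words(advert))
```

For the sample advert it flags "competitive", "aggressive" and "rockstar" as masculine-coded and "collaborative" as feminine-coded, giving a recruiter a prompt to reword the advert before it is published.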

Even though AI has many advantages when it comes to talent acquisition, we must remember that it relies heavily on data collected and chosen by humans, and that it is trained with machine learning algorithms that are also created by humans (Zhang, et al., 2019). Unfortunately, this means that the people who create these algorithms or select the data may have their own biases, which could easily be reflected in the AI's output.

Challenges to consider

One challenge to consider with AI and predictive analytics is data management: ensuring that data is treated with respect and that people can choose to take part in data collection knowingly, confidentially or anonymously. Organisations often struggle to collect data from their employees to support their diversity and inclusion targets. This may be because some employees fear that disclosing certain diverse traits could be used against them, particularly when it comes to promotions or career development. There is therefore still a great deal organisations can do to earn employees’ trust, so that they feel able to disclose such information knowing it will not be used against them.

Another challenge for organisations is that, once they have results from AI tools or predictive analytics, they must act on them to increase the representation of diverse groups rather than shying away from the outcome, even if it is not what they expected.

Summary

In conclusion, to avoid biased output from AI tools and predictive analytics in diversity and inclusion work, it is vital that collected data is reviewed thoroughly to identify and remove any biases before it is used. The samples used to train the machines should also be tested and modelled regularly, and experts should adjust the algorithms where needed.
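One common way to review an AI tool's output regularly is to compare selection rates between groups. The sketch below uses the "four-fifths rule" from US employment selection guidance as its threshold: a group whose selection rate falls below 80% of the highest group's rate is flagged for review. This technique is chosen here for illustration and is not one the article prescribes; the function names and the screening results are hypothetical.

```python
def selection_rates(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """outcomes maps group -> (number selected, number of applicants)."""
    return {group: selected / applicants
            for group, (selected, applicants) in outcomes.items()}

def adverse_impact(outcomes: dict[str, tuple[int, int]],
                   threshold: float = 0.8) -> dict[str, float]:
    """Return each group whose selection rate is below `threshold` times the
    highest group's rate, mapped to its impact ratio (its rate / highest rate)."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    return {group: rate / best
            for group, rate in rates.items()
            if rate / best < threshold}

# Hypothetical results from an AI CV-screening tool.
screening = {"group A": (50, 100), "group B": (30, 100), "group C": (45, 100)}
print(selection_rates(screening))
print(adverse_impact(screening))
```

Here group B's selection rate (0.3) is only 60% of group A's (0.5), so it is flagged, while group C (90%) is not. A flag like this is a prompt for experts to examine the data and the algorithm, not proof of discrimination on its own.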

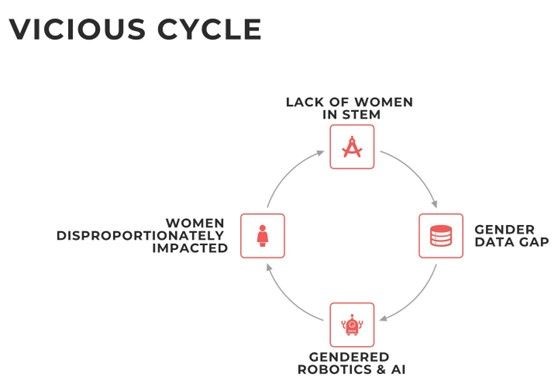

Furthermore, I would like to reflect on what Professor Aimee Van Wynsberghe presented at the 2019 WISE Campaign Conference. She displayed an image of a ‘vicious cycle’ in robotics as seen below.

This was exceptionally thought-provoking, as it clearly illustrated how a lack of women in STEM can lead to gendered robotics and AI, which will disproportionately affect women. Aimee left us wondering whether gender roles could be reinforced through AI. I would also like you to consider whether machine learning has the potential to be racist. For example, one study found that driverless cars are more likely to drive into black pedestrians because the detection technology was better at recognising people with lighter skin tones (Cuthbertson, 2019).

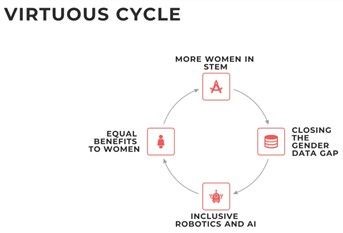

This research leads us to conclude that it is vital to have more diverse individuals including females and BAME individuals on the design teams when creating this technology, and more sitting on robot ethics boards, making decisions about our future with robots. Can we learn to create more ethical machines? If we get the balance right, this will lead to a ‘virtuous cycle’ as seen below.

Article References

Baker, B., 2013. The Next Generation of Diversity Metrics: Predictive and Game-Changing Analytics, s.l.: Diversity Best Practices.

Bourke, J., 2016. Which two heads are better than one? How diverse teams create breakthrough ideas and make smarter decisions. Australia: Australian Institute of Company Directors.

Bourke, J. & Dillon, B., 2018. The Diversity and Inclusion Revolution – Eight Powerful Truths, s.l.: Deloitte Development LLC.

Cuthbertson, A., 2019. Self-Driving Cars More Likely to Drive into Black People, Study Claims. [Online] [Accessed 27 September 2020].

Nico Kok, J., 2009. Artificial Intelligence: Definition, Trends, Techniques and Cases. s.l.:EOLSS Publications.

Zhang, H., Feinzig, S., Raisbeck, L. & McCombe, I., 2019. The role of AI in mitigating bias to enhance diversity and inclusion, New York: IBM Corporation.

Zojceska, A., 2018. Top 10 Benefits of Diversity in the Workplace. [Online] [Accessed 26 September 2020].